As some of you might know, the first bot I started was the curation bot Aicu. I haven't forgotten about it, and it's been running steadily since its inception. Honestly, that's a lie: I'd pretty much forgotten about my curator bot for a month or two, and it's been trucking on undisturbed on an old, cheap "server", selecting, upvoting and resteeming promising posts. I remembered it a couple of weeks before the last hard fork.

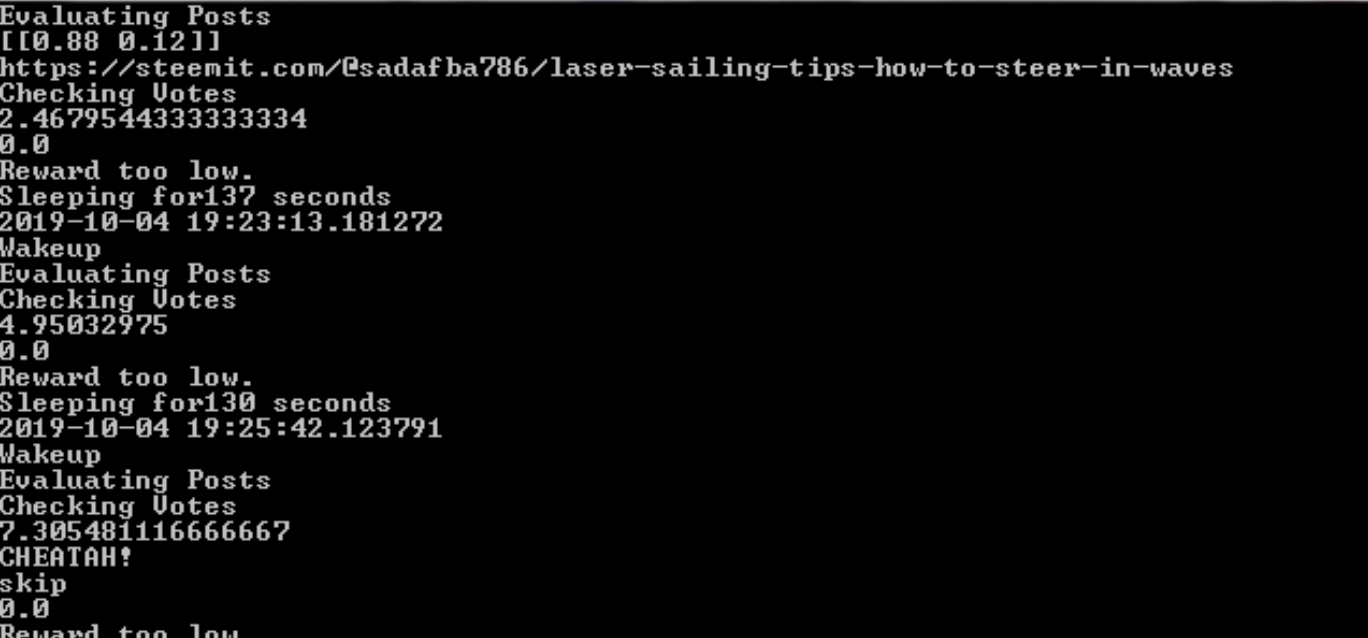

And to my surprise, I was greeted with this sight (or at least a similar one; I didn't take a screenshot back then).

It was still running, undisturbed by power outages or network disconnects. Considering that I'm watching a Warhammer 40,000: Mechanicus Let's Play in the background, I think I have to put some Necron artwork up as Aicu's profile pic.

What now?

I've neglected my bot for a while now. I put some more SP into my account and looked at the improvements in the field of Natural Language Processing, which were actually quite numerous considering that I developed Aicu just a couple of months ago. Then again, I wasn't using up-to-date methods back then either.

That's what I'm going to tackle now: updating Aicu's decision machinery from 2000-era NLP tech to state-of-the-art 2019 Natural Language Processing techniques. It's been just 19 years since the start of 2000, but NLP has been moving and improving at a breakneck pace since then. The most obvious improvements came in the form of deep learning models, like the massive transformer-based approach of BERT. I'm still not 100% convinced that BERT isn't just a very useful case of overfitting, but I'll try it nonetheless. I'm also a bit worried that BERT won't run with high performance on an old integrated graphics chipset.

Aside from just brainlessly using BERT, I want to improve the performance of my curator bot on all fronts: syntax, semantics, and image evaluation. The last part I haven't implemented yet, and it's high on my TODO list. But before that, and considering that I'm no expert in computer vision and the like, I'll focus on the language model.

Much work to be done, and so little time. Especially since I want to improve my Steem Monsters Bot as well.

Performance

I don't have a script ready to evaluate Aicu's performance in a more insightful way, so I have to borrow the curation details from steemworld.org.

Looks pretty good. It seems my model was able to generalize quite well and learn to identify high-quality posts, although I never trained it on upvote value, because I don't think a post's upvote value reflects its quality. A high-quality post can earn a high upvote value, but so can a post that just uses bid bots.
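A first version of the missing evaluation script would not need to be complicated. The sketch below is only an illustration under loud assumptions: the `CurationRecord` fields and the `summarize` helper are hypothetical names, the records are assumed to be copied by hand from a curation overview, and the sample values are placeholders, not real numbers from Aicu.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class CurationRecord:
    """One curated post. All field names here are hypothetical."""
    author: str
    permlink: str
    curation_reward_sp: float  # curation reward earned on this post, in SP


def summarize(records):
    """Return simple aggregate statistics for a list of CurationRecord."""
    if not records:
        # Nothing curated yet: report zeros instead of failing on mean().
        return {"posts": 0, "unique_authors": 0,
                "total_sp": 0.0, "mean_sp": 0.0, "median_sp": 0.0}
    rewards = [r.curation_reward_sp for r in records]
    return {
        "posts": len(records),
        "unique_authors": len({r.author for r in records}),
        "total_sp": sum(rewards),
        "mean_sp": mean(rewards),
        "median_sp": median(rewards),
    }


# Placeholder records for illustration only (made-up values).
sample = [
    CurationRecord("author_a", "post-one", 0.5),
    CurationRecord("author_b", "post-two", 0.25),
    CurationRecord("author_a", "post-three", 0.75),
]

if __name__ == "__main__":
    for key, value in summarize(sample).items():
        print(f"{key}: {value}")
```

Note that curation reward is still a payout-based number, so it has the same blind spot as upvote value: it says how well the votes paid off, not how good the selected posts were.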

Aside from that, I also suspect that some people use Aicu as a "curation trail" and either auto-vote on its upvotes or selectively browse through the resteems. That way the selected authors get ever greater recognition, which I think is great. Let's see how Aicu the curator develops over the coming months.