And once more my bot finishes in Champion II. This time my update is going to be kind of short, but I'll get into everything interesting.

Bot's performance

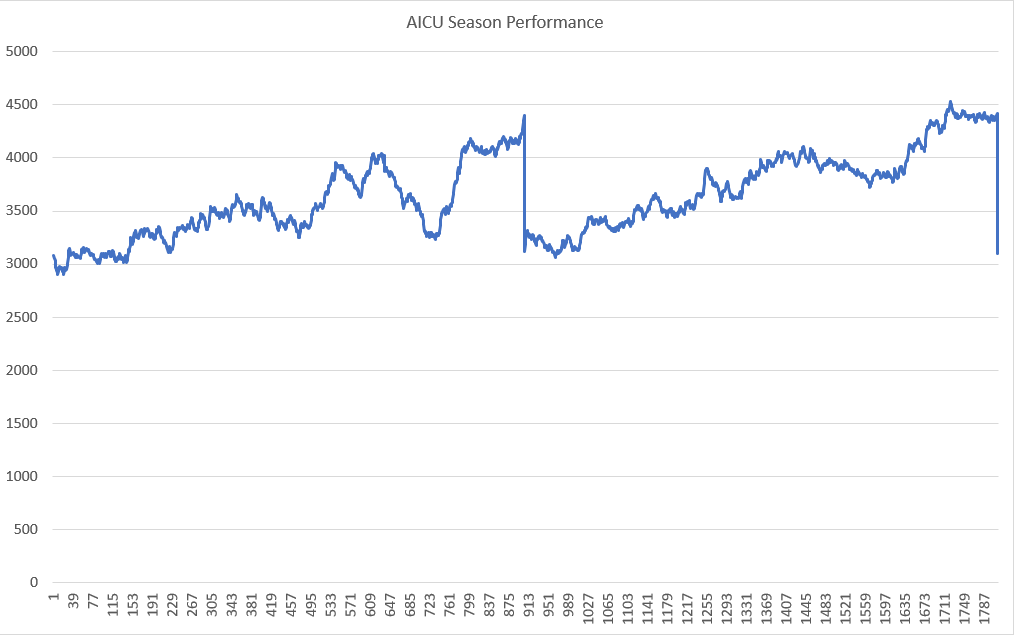

First and foremost: how did my bot perform this season?

As you can see, it performed pretty much the same as last season. It reached a slightly higher peak, but aside from that, no surprises here.

How to improve Steem Monsters Aicu further

Right now I'm left with two options. The first is to train Aicu with some sort of reinforcement learning algorithm. In my opinion, one of the Temporal Difference learning schemes should be sufficient for this, e.g. double Q-learning. If I set this algorithm against my current bot, chances are that I'll end up with a more robust bot that plays better. One issue with this approach is that it will take too much time with my current MCTS bot. I let Aicu compute for 100 seconds to find a counter team to the last 5 enemy teams. If I lower this timer, the generated teams won't be as strong. But with 100 seconds per iteration, a dynamic playoff between the reinforcement learning system and Aicu won't work.
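To make the double Q-learning idea a bit more concrete, here is a minimal tabular sketch of the update step. This is not Aicu's code. How states and actions would be encoded for Steem Monsters (for example, state = ruleset/mana cap, action = team) is an assumption, and the names `double_q_update`, `qa` and `qb` are made up for illustration.

```python
import random
from collections import defaultdict

def double_q_update(qa, qb, state, action, reward, next_state, next_actions,
                    alpha=0.1, gamma=0.99, rng=random):
    """One tabular double Q-learning step on two value tables.

    qa and qb map (state, action) -> estimated value. The state/action
    encoding is hypothetical; any hashable values work.
    """
    # Flip a coin to decide which table gets updated this step.
    if rng.random() < 0.5:
        qa, qb = qb, qa
    if next_actions:
        # Select the best next action with the table being updated ...
        best = max(next_actions, key=lambda a: qa[(next_state, a)])
        # ... but evaluate that action with the other table.
        target = reward + gamma * qb[(next_state, best)]
    else:
        # Terminal transition (e.g. the battle is over): no bootstrapping.
        target = reward
    qa[(state, action)] += alpha * (target - qa[(state, action)])

# Usage: a single finished battle that was won (reward 1.0).
qa, qb = defaultdict(float), defaultdict(float)
double_q_update(qa, qb, "standard/20", "team_a", 1.0, None, [], alpha=0.5)
```

Splitting action selection and action evaluation across two tables is what separates double Q-learning from plain Q-learning; it reduces the tendency to overestimate values.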

On the other hand, comparatively simple approaches seem to be doing extremely well in Steem Monsters. For example: take a sufficiently large sample of matches and play the teams that win the most for a given ruleset/mana cap combination. I guarantee you will get pretty far with a decent deck. Up until this point, I ignored this approach: because I wanted to learn more about reinforcement learning, I tried to improve my existing system as far as possible. But if I want to train a bot using Temporal Difference methods, I can either take a large match set, generate samples from it, simulate the battles and let the bot learn from those, or I can build a bot that works similarly to the "most frequent winning teams" approach and let it generate matches for the training.
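The "most frequent winning teams" approach could look roughly like the sketch below. The match format (a tuple of ruleset, mana cap and winning team) and the function names are assumptions for illustration, and the card names in the usage example are placeholders rather than real match data.

```python
from collections import Counter, defaultdict

def build_win_table(matches):
    """Count wins per team for every (ruleset, mana_cap) combination.

    `matches` is assumed to be an iterable of
    (ruleset, mana_cap, winning_team) tuples, with the team given as an
    ordered list of card names.
    """
    table = defaultdict(Counter)
    for ruleset, mana_cap, winning_team in matches:
        # Keep the card order, because positions matter in a battle.
        table[(ruleset, mana_cap)][tuple(winning_team)] += 1
    return table

def pick_team(table, ruleset, mana_cap):
    """Return the team with the most recorded wins, or None if unseen."""
    wins = table.get((ruleset, mana_cap))
    if not wins:
        return None
    team, _ = wins.most_common(1)[0]
    return team

# Usage with placeholder data.
matches = [
    ("Standard", 20, ["Summoner X", "Card A", "Card B"]),
    ("Standard", 20, ["Summoner X", "Card A", "Card B"]),
    ("Standard", 20, ["Summoner Y", "Card C"]),
]
table = build_win_table(matches)
best = pick_team(table, "Standard", 20)
```

A real version would also have to filter for the cards that are actually in my deck, which this sketch leaves out.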

I think I can leverage a data structure that is used in search engines to make this approach more efficient. It's called a trie, or prefix tree.

A trie compresses the search space by storing shared prefixes only once, so entries are stored separately only from the point where they differ. This speeds up search significantly and allows search results to be saved efficiently. If I use cards instead of characters and attach transition probabilities to the links between nodes, I can build a team selection strategy for each ruleset/mana cap combination without saving every single team. The end result should be comparable, and also more flexible. Add a quick check whether the selected team could win against my opponent, plus some adaptations to it if necessary, and I might have a more suitable approach for generating matches for training Temporal Difference algorithms, or a better approach for a Steem Monsters bot in general.
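Here is a rough sketch of how such a card trie with transition probabilities might look. I'm assuming one trie per ruleset/mana cap combination and teams given as ordered lists of card names; the class and method names are hypothetical, and the probabilities are simply relative counts of winning teams.

```python
import random

class TrieNode:
    def __init__(self):
        self.children = {}  # card name -> TrieNode
        self.count = 0      # winning teams passing through this node
        self.ends = 0       # winning teams that end exactly here

class TeamTrie:
    """Prefix tree over winning teams (one per ruleset/mana cap, by assumption)."""

    def __init__(self):
        self.root = TrieNode()

    def insert(self, team):
        node = self.root
        node.count += 1
        for card in team:
            node = node.children.setdefault(card, TrieNode())
            node.count += 1
        node.ends += 1

    def transition_probs(self, prefix):
        """Probability of each next card, given the cards picked so far."""
        node = self.root
        for card in prefix:
            node = node.children.get(card)
            if node is None:
                return {}
        return {c: child.count / node.count for c, child in node.children.items()}

    def sample_team(self, rng=random):
        """Walk from the root, picking each next card by its win count."""
        team, node = [], self.root
        while True:
            options = list(node.children.items())
            weights = [child.count for _, child in options]
            if node.ends:
                # Stopping here is also an option if teams ended at this node.
                options.append((None, None))
                weights.append(node.ends)
            if not options:
                return team
            card, child = rng.choices(options, weights=weights)[0]
            if card is None:
                return team
            team.append(card)
            node = child

# Usage with placeholder card names.
trie = TeamTrie()
trie.insert(["Summoner X", "Card A", "Card B"])
trie.insert(["Summoner X", "Card A", "Card C"])
trie.insert(["Summoner X", "Card D"])
probs = trie.transition_probs(["Summoner X"])
team = trie.sample_team(random.Random(0))
```

The two teams that start with the same summoner and first card share those nodes, which is where the saving over a flat list of teams comes from.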

Aside from that, I can also use the MCTS to search those tree structures built from winning teams to find the best team against the opponent.

While writing up this post I had another idea, which I'm testing right now. Until now, my MCTS had to defeat a fixed set of 5 teams for each team that is evaluated. The issue with that approach is that if a weak team is among those 5 opponent teams, then weaker teams will collect rewards as well. It's also slower, because it needs to run 5 simulations for each team. Now onto my idea: what if I increase the pool of opponent teams that the MCTS has to defeat, but select a random team from this larger set for each iteration? I'd argue that this approach allows the MCTS to generalize better. It can't overfit on one team, and it needs to find a team which is very flexible and powerful. I'm curious whether that approach works. For now, I don't see a difference. But the bot managed to defeat steallion, previously number 4, which I don't remember ever defeating. So maybe it's off to a promising start.
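The difference between the two evaluation schemes can be sketched like this. The `simulate` function is a stand-in for the battle simulator (assumed to return 1.0 for a win and 0.0 for a loss), and both function names are made up for this example.

```python
import random

def evaluate_fixed(team, opponents, simulate):
    """Old scheme: one simulation against every team in a fixed set."""
    return sum(simulate(team, opp) for opp in opponents) / len(opponents)

def evaluate_sampled(team, opponent_pool, simulate, rng=random):
    """New idea: a single simulation against a randomly drawn opponent."""
    return simulate(team, rng.choice(opponent_pool))

# Usage with a dummy simulator: the candidate beats everything but "strong".
def dummy_simulate(team, opponent):
    return 0.0 if opponent == "strong" else 1.0

pool = ["weak", "weak", "weak", "strong"]
fixed_reward = evaluate_fixed("candidate", pool, dummy_simulate)
sampled_reward = evaluate_sampled("candidate", pool, dummy_simulate, random.Random(1))
```

Each sampled reward is noisier than the fixed-set average, but it costs one simulation instead of five, and over many MCTS iterations the average reward of a team approaches its win rate against the whole pool.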

Giveaway

Alright, onto the giveaway. This giveaway will work as usual, but it's going to be epic and legendary. Comment below with your SM username if you want to participate. The cards will be distributed randomly after the payout of this post is finished. So you have seven days in total to enter :)

| Sacred Unicorn | Black Dragon | Phantom Soldier | Imp Bowman | Daria Dragonscale |
| --- | --- | --- | --- | --- |